Detection of bimanual gestures everywhere: why it matters, what we need and what is missing

Software Architecture

Abstract

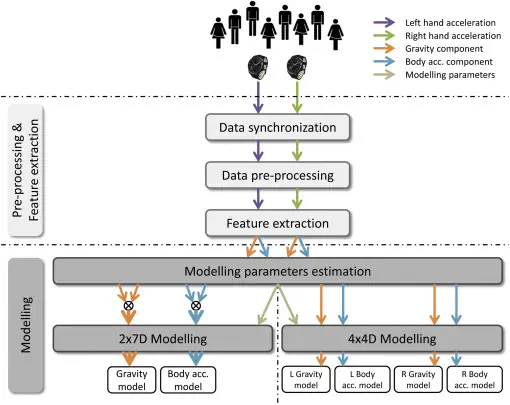

Bimanual gestures are of the utmost importance both for the study of human motor coordination and in everyday activities. Reliable detection of bimanual gestures in unconstrained environments is fundamental for their clinical study and for assessing common activities of daily living. This paper investigates techniques for the reliable, unconstrained detection and classification of bimanual gestures. The work assumes the availability of inertial data originating from the two hands/arms, builds upon a previously developed technique for gesture modeling based on Gaussian Mixture Modeling (GMM) and Gaussian Mixture Regression (GMR), and compares different modeling and classification techniques, which rest on a number of assumptions inspired by the literature on how bimanual gestures are represented and modeled in the brain. Experiments report results for five everyday bimanual activities, selected on the basis of three main parameters: whether or not the two hands are constrained by a physical tool, whether or not a specific sequence of single-hand gestures is required, and whether or not the activity is recursive. In the best performing combination of modeling approach and classification technique, we achieve overall accuracy, precision, recall and F1-score above 80%.
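For readers unfamiliar with the four figures of merit quoted above, the following minimal sketch shows one common way to compute them for a multi-class gesture classifier. It assumes macro-averaging over classes, which is our illustrative choice and is not stated in the abstract; the function name `classification_metrics` and the gesture labels `g1` and `g2` are hypothetical.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall and F1-score.

    Assumption: per-class scores are averaged with equal weight (macro
    averaging). Other averaging schemes give different numbers.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equally long")

    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1   # correct detection of class `truth`
        else:
            fp[pred] += 1    # `pred` was reported but was wrong
            fn[truth] += 1   # `truth` was missed

    def safe_div(num, den):
        # Convention: a score with an empty denominator counts as 0.
        return num / den if den else 0.0

    precisions = [safe_div(tp[c], tp[c] + fp[c]) for c in labels]
    recalls = [safe_div(tp[c], tp[c] + fn[c]) for c in labels]
    f1s = [safe_div(2 * p * r, p + r) for p, r in zip(precisions, recalls)]

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Hypothetical example with two gesture classes.
metrics = classification_metrics(["g1", "g1", "g2", "g2"],
                                 ["g1", "g2", "g2", "g2"])
```

Under macro-averaging every gesture class carries the same weight regardless of how often it occurs, which is a reasonable default when classes are roughly balanced.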